# Virtual Camera

The virtual camera feature allows third-party applications to expose a remote or
virtual camera to the standard Android camera frameworks (Camera2/CameraX, NDK,
camera1).

The stack is composed of four parts:

1. The **Virtual Camera Service** (this directory), which implements the Camera
   HAL and acts as an interface between the Android Camera Server and the
   *Virtual Camera Owner* (via the VirtualDeviceManager APIs).

2. The **VirtualDeviceManager**, running in the system process and handling the
   communication between the Virtual Camera Service and the Virtual Camera
   Owner.

3. The **Virtual Camera Owner**, the client application declaring the Virtual
   Camera and handling the production of image data. We will also refer to this
   part as the **producer**.

4. The **Consumer Application**, the client application consuming camera data,
   which can be any application using the camera APIs.

This document describes the functionalities of the *Virtual Camera Service*.

## Before reading

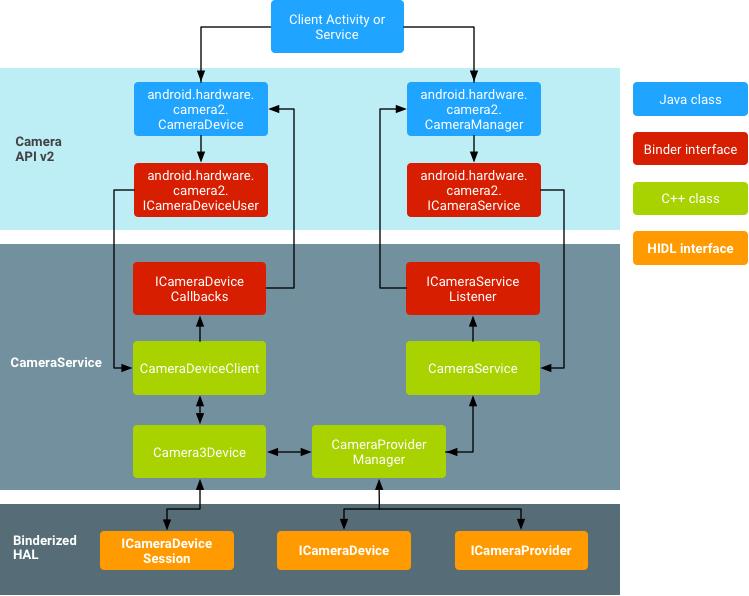

The service implements the Camera HAL. It is best to first get some
understanding of how the HAL works by reading the
[HAL documentation](https://source.android.com/docs/core/camera).

The HAL implementations are declared in:

- [VirtualCameraDevice](./VirtualCameraDevice.h)
- [VirtualCameraProvider](./VirtualCameraProvider.h)
- [VirtualCameraSession](./VirtualCameraSession.h)

## Current supported features

Virtual cameras report the `EXTERNAL`
[hardware level](https://developer.android.com/reference/android/hardware/camera2/CameraCharacteristics#INFO_SUPPORTED_HARDWARE_LEVEL),
but some
[functionalities of the `EXTERNAL`](https://developer.android.com/reference/android/hardware/camera2/CameraMetadata#INFO_SUPPORTED_HARDWARE_LEVEL_EXTERNAL)
hardware level are not fully supported.

Supported features:

- Single input, multiple output streams and captures
- Support for YUV and JPEG

Notable missing features:

- Support for auto 3A (AWB, AE, AF): the virtual camera announces convergence
  of the 3A algorithms even though it cannot receive any information about them
  from the owner.

- No flash/torch support
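
Because the owner never reports 3A progress, the announced convergence can be pictured as fixed values written into every capture result, regardless of frame content. The following is a minimal sketch that assumes simplified, hypothetical types (`AeState`, `AwbState`, `AfState`, `ThreeAState`, `makeReported3AState`) loosely modeled on the camera2 `CONTROL_AE_STATE`, `CONTROL_AWB_STATE` and `CONTROL_AF_STATE` result keys. These are not the real metadata enums. Reporting a focused AF state is also an assumption of this sketch.

```cpp
#include <cstdint>

// Hypothetical, simplified 3A states loosely modeled on the camera2 result
// keys. They are NOT the real camera metadata enums.
enum class AeState { kInactive, kSearching, kConverged };
enum class AwbState { kInactive, kSearching, kConverged };
enum class AfState { kInactive, kPassiveScan, kPassiveFocused };

struct ThreeAState {
  AeState ae;
  AwbState awb;
  AfState af;
};

// The owner cannot report any 3A information, so the reported state does not
// depend on the frame: every capture result announces convergence.
// Reporting kPassiveFocused for AF is an assumption of this sketch.
ThreeAState makeReported3AState(int64_t /*frameNumber*/) {
  return ThreeAState{AeState::kConverged, AwbState::kConverged,
                     AfState::kPassiveFocused};
}
```

Since the state is constant, a consumer waiting for AE or AWB convergence proceeds immediately, even if the owner's image is still adapting.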

## Overview

Graphic data are exchanged using the Surface infrastructure. Like any other
Camera HAL, the virtual camera receives the Surfaces to write data into from the
client. Virtual Camera exposes a **different** Surface onto which the owner can
write data. In between, an EGL texture adapts (if needed) the producer data to
the required consumer format (only scaling for now; support for rotation and
cropping might be added in the future).

When the client application requires multiple resolutions, the closest one
among the supported resolutions is used for the input data, and the image data
is downscaled for the lower resolutions.
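
The input selection described above can be sketched as follows. This sketch assumes that "closest" is measured by pixel count against the largest requested output. The `Resolution` type and the `largestOutput` and `pickInputResolution` helpers are hypothetical and are not the service's actual code.

```cpp
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical resolution type used only for this sketch.
struct Resolution {
  int width;
  int height;
  int64_t pixels() const { return static_cast<int64_t>(width) * height; }
};

// Returns the largest requested output, measured by pixel count.
Resolution largestOutput(const std::vector<Resolution>& outputs) {
  Resolution best{0, 0};
  for (const Resolution& r : outputs) {
    if (r.pixels() > best.pixels()) best = r;
  }
  return best;
}

// Picks the supported input resolution whose pixel count is closest to the
// largest requested output; smaller outputs are then downscaled from it.
// Measuring "closest" by pixel count is an assumption of this sketch.
// Precondition: `supported` is non-empty.
Resolution pickInputResolution(const std::vector<Resolution>& supported,
                               const std::vector<Resolution>& outputs) {
  const int64_t target = largestOutput(outputs).pixels();
  Resolution best = supported.front();
  int64_t bestDistance = std::numeric_limits<int64_t>::max();
  for (const Resolution& r : supported) {
    const int64_t diff = r.pixels() - target;
    const int64_t distance = diff < 0 ? -diff : diff;
    if (distance < bestDistance) {
      bestDistance = distance;
      best = r;
    }
  }
  return best;
}
```

For example, suppose the supported inputs are 640x480, 1280x720 and 1920x1080, and the requested outputs are 320x240 and 1280x720. The sketch then selects 1280x720 as the input, and the 320x240 output is produced by downscaling that frame.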

The rendering pipeline depends on the type of output. Here is an overview of
the YUV and JPEG pipelines.

**YUV Rendering:**

```
Virtual Device Owner Surface[1] (Producer) --{binds to}--> EGL
Texture[1] --{renders into}--> Client Surface[1-n] (Consumer)
```

**JPEG Rendering:**

```
Virtual Device Owner Surface[1] (Producer) --{binds to}--> EGL
Texture[1] --{compress data into}--> temporary buffer --{renders into}-->
Client Surface[1-n] (Consumer)
```
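
Both pipelines share a single input. The texture is updated once per frame, and then each client Surface is served according to its format. The sketch below models that dispatch for a request with several outputs. `OutputFormat`, `Output` and `planFrame` are hypothetical names, and the operations are plain strings that stand in for the actual EGL and JPEG work.

```cpp
#include <string>
#include <vector>

// Hypothetical output description; the real service works with HAL stream
// configurations and pixel formats.
enum class OutputFormat { kYuv, kJpeg };

struct Output {
  std::string name;
  OutputFormat format;
};

// Lists, in order, the operations performed for one frame: the shared EGL
// texture is updated once from the owner's Surface, then every client output
// is served by the pipeline matching its format.
std::vector<std::string> planFrame(const std::vector<Output>& outputs) {
  std::vector<std::string> ops = {"update EGL texture from owner Surface"};
  for (const Output& out : outputs) {
    switch (out.format) {
      case OutputFormat::kYuv:
        ops.push_back("render texture into " + out.name);
        break;
      case OutputFormat::kJpeg:
        ops.push_back("JPEG-compress texture via temporary buffer into " +
                      out.name);
        break;
    }
  }
  return ops;
}
```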

## Life of a capture request

> Before reading the following, you must understand the concepts of
> [CaptureRequest](https://developer.android.com/reference/android/hardware/camera2/CaptureRequest)
> and
> [OutputConfiguration](https://developer.android.com/reference/android/hardware/camera2/OutputConfiguration).

1. The consumer creates a session with one or more `Surfaces`.

2. The virtual camera owner receives a call to
   `VirtualCameraCallback#onStreamConfigured` with a reference to another
   `Surface` that it can write into.

3. The consumer then starts sending `CaptureRequests`. The owner receives a
   call to `VirtualCameraCallback#onProcessCaptureRequest`, at which point it
   should write the required data into the Surface it previously received. At
   the same time, a new task is enqueued in the render thread.

4. The [VirtualCameraRenderThread](./VirtualCameraRenderThread.cc) consumes the
   enqueued tasks as they come. It waits for the producer to write into the
   input Surface (using `Surface::waitForNextFrame`).

   > **Note:** Since the Surface API allows us to wait for the next frame,
   > there is no need for the producer to notify when the frame is ready by
   > calling a `processCaptureResult()` equivalent.

5. The EGL texture is updated with the content of the Surface.

6. The EGL texture renders into the output Surfaces.

7. The camera client is notified of the "shutter" event and the
   `CaptureResult` is sent to the consumer.
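
Steps 3 to 7 describe a single-consumer task queue: capture requests enqueue work, and one render thread drains it in order. The following is a minimal sketch that uses only the standard library. `RenderThread`, `RenderTask` and both callbacks are hypothetical stand-ins, not the interface of VirtualCameraRenderThread. The real thread waits on the input Surface and drives EGL, whereas this sketch calls plain functions.

```cpp
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical task: one per capture request.
struct RenderTask {
  int64_t frameNumber;
};

// Minimal single-consumer queue. The callbacks are placeholders for waiting on
// the input Surface and for rendering plus sending the shutter/CaptureResult.
class RenderThread {
 public:
  RenderThread(std::function<void()> waitForNextFrame,
               std::function<void(const RenderTask&)> renderAndNotify)
      : waitForNextFrame_(std::move(waitForNextFrame)),
        renderAndNotify_(std::move(renderAndNotify)),
        worker_([this] { loop(); }) {}

  // Stops the worker after it has drained every pending task.
  ~RenderThread() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      stopping_ = true;
    }
    cv_.notify_one();
    worker_.join();
  }

  // Called when a capture request arrives (step 3).
  void enqueue(RenderTask task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      queue_.push_back(task);
    }
    cv_.notify_one();
  }

 private:
  void loop() {
    while (true) {
      RenderTask task{};
      {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return stopping_ || !queue_.empty(); });
        if (queue_.empty()) return;  // stopping and nothing left to do
        task = queue_.front();
        queue_.pop_front();
      }
      waitForNextFrame_();     // step 4: block until the producer wrote a frame
      renderAndNotify_(task);  // steps 5-7: render outputs, then notify
    }
  }

  std::function<void()> waitForNextFrame_;
  std::function<void(const RenderTask&)> renderAndNotify_;
  std::mutex mutex_;
  std::condition_variable cv_;
  std::deque<RenderTask> queue_;
  bool stopping_ = false;
  std::thread worker_;  // declared last so it starts after the other members
};
```

A single consumer thread processes requests in the order they were enqueued. Keeping rendering on one thread also suits EGL, where a context can be current on only one thread at a time.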

## EGL Rendering

### The render thread

The [VirtualCameraRenderThread](./VirtualCameraRenderThread.h) module takes care
of rendering the input from the owner to the output via the EGL texture. The
rendering targets either a JPEG buffer (the BLOB rendering used to create a
JPEG) or a YUV buffer, used mainly for preview Surfaces or video. Two
EGLPrograms (shaders) defined in [EglProgram](./util/EglProgram.cc) handle the
rendering of the data.

### Initialization

[EglDisplayContext](./util/EglDisplayContext.h) initializes the whole EGL
environment (Display, Surface, Context, and Config).

The EGL rendering is backed by an
[ANativeWindow](https://developer.android.com/ndk/reference/group/a-native-window),
which is the native counterpart of a
[Surface](https://developer.android.com/reference/android/view/Surface). A
Surface is itself the producer side of a buffer queue, whose consumer is either
the display (camera preview) or an encoder (to save the data or send it across
the network).

### More about OpenGL

To better understand how the EGL rendering works, the following resources can be
used:

Introduction to OpenGL: https://learnopengl.com/

The official documentation of the EGL API is available at:
https://www.khronos.org/registry/egl/sdk/docs/man/xhtml/

Individual EGL functions can also be looked up with a Google search using the
following query:

```
[function name] site:https://registry.khronos.org/EGL/sdk/docs/man/html/

// example: eglSwapBuffers site:https://registry.khronos.org/EGL/sdk/docs/man/html/
```